Top 10 Most Common Machine Learning Applications Explained - 2024 | Exploring Common Machine Learning Algorithms

Exploring Common Machine Learning Algorithms: From Linear Regression to Ensemble Techniques

Machine learning has transformed the way we analyze data and make predictions, offering a range of algorithms that cater to various types of problems. From linear relationships to complex ensembles, these algorithms hold the key to unlocking insights and making accurate forecasts. In this article, we'll delve into some of the most common machine learning algorithms, exploring their principles, applications, strengths, and limitations.

Linear Regression

Let's begin with the foundation of many predictive models, Linear Regression. This statistical method models the relationship between a continuous dependent variable and an independent variable by finding the best-fitting line through a set of data points, usually the line that minimizes the sum of squared errors (ordinary least squares). The equation y = b0 + b1*x governs this model, where y is the dependent variable, x is the independent variable, b0 is the y-intercept, and b1 is the slope. Although the basic form uses a single independent variable, Linear Regression extends to multiple independent variables as y = b0 + b1*x1 + b2*x2 + … + bn*xn. Its strength lies in predicting new observations from historical data, and it is often used for forecasting tasks such as product sales or stock prices. However, Linear Regression assumes a linear relationship between the variables, is sensitive to outliers, and can fall short on complex, non-linear data patterns.
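As a minimal sketch of the single-variable case, the Python snippet below fits b0 and b1 by ordinary least squares, assuming that is the "best fit" criterion (it is the usual one). The function names `fit_simple_linear_regression` and `predict` are hypothetical illustrations, not a library API.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (b0, b1) minimizing the sum of squared errors for y = b0 + b1*x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by the variance of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    b1 = sxy / sxx
    # The least-squares line always passes through (mean_x, mean_y)
    b0 = mean_y - b1 * mean_x
    return b0, b1


def predict(b0, b1, x):
    return b0 + b1 * x


xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]  # hypothetical data lying exactly on y = 1 + 2x
b0, b1 = fit_simple_linear_regression(xs, ys)
print(b0, b1)  # 1.0 2.0
```

Because the sample points lie exactly on y = 1 + 2x, the fit recovers b0 = 1.0 and b1 = 2.0. With several independent variables, the same least-squares criterion is normally solved with linear algebra rather than this closed form.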

Logistic Regression

Transitioning to classification tasks, Logistic Regression is a formidable tool. Instead of predicting continuous values, this algorithm estimates the probability of a binary outcome. It passes a linear combination of the inputs through the logistic (sigmoid) function, p = 1 / (1 + e^(-(b0 + b1*x1 + … + bn*xn))), which maps any real number to a probability between 0 and 1, enabling tasks like spam detection and churn prediction. It extends to multiclass classification through one-vs-rest or softmax (multinomial) techniques. Logistic Regression's simplicity and interpretability are assets, though it assumes a linear relationship between the features and the log-odds of the outcome, so it needs appropriate feature engineering to capture non-linear effects.
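Here is a minimal sketch of binary Logistic Regression, assuming plain batch gradient descent on the mean log-loss as the training method (one simple option; libraries usually use faster solvers and add regularization). The names `fit_logistic_regression` and `predict_proba`, the learning rate, the epoch count, and the toy data are all hypothetical.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic_regression(X, y, lr=0.1, epochs=5000):
    """Batch gradient descent on the mean log-loss.
    X: list of feature lists; y: labels in {0, 1}. Returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * d
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi)))
            err = p - yi  # derivative of the log-loss with respect to the linear score
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the averaged gradient
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Estimated probability that x belongs to class 1."""
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


# Hypothetical toy data: one feature, class 1 for larger values
X = [[0.5], [1.0], [1.5], [3.5], [4.0], [4.5]]
y = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic_regression(X, y)
print(predict_proba(w, b, [1.0]) < 0.5)  # True
print(predict_proba(w, b, [4.0]) > 0.5)  # True
```

Thresholding the probability at 0.5 turns the model into a classifier; other thresholds trade precision against recall.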

Support Vector Machines (SVMs)

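When data separation isn't linear, Support Vector Machines (SVMs) step in, although they handle linearly separable data just as well. This supervised learning algorithm finds the decision boundary (a hyperplane) that maximizes the margin between the classes; only the training points closest to the boundary, the support vectors, determine where it lies. SVMs work well in high-dimensional spaces and use the kernel trick to learn non-linear boundaries without explicitly computing the higher-dimensional features. The soft-margin formulation, controlled by a regularization parameter (commonly called C), tolerates some noise and outliers, but performance hinges on the choice of kernel and its parameters, and training becomes computationally expensive on large datasets.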
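The sketch below shows only a linear soft-margin SVM (no kernel trick), trained with the Pegasos stochastic subgradient method, which is swapped in here for readability; dedicated SVM libraries use different solvers. It also omits the bias term, so the hyperplane passes through the origin, a simplification that suits the hypothetical symmetric toy data. The names `train_linear_svm` and `predict_svm` and the values of `lam` and `epochs` are assumptions.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Minimize lam/2 * ||w||^2 + mean hinge loss with Pegasos-style stochastic subgradient steps.
    Simplification: no bias term, so the separating hyperplane passes through the origin.
    X: list of feature lists; y: labels in {-1, +1}."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    order = list(range(len(X)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # step size shrinks over time
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
            # Shrink w: subgradient step for the L2 regularization term
            w = [(1.0 - eta * lam) * wj for wj in w]
            if margin < 1:
                # Point lies inside the margin or on the wrong side: hinge loss is active
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def predict_svm(w, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


# Hypothetical toy data: two groups on opposite sides of the origin
X = [[2, 2], [3, 3], [2, 3], [-2, -2], [-3, -3], [-2, -3]]
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([predict_svm(w, x) for x in X])  # [1, 1, 1, -1, -1, -1]
```

Only points with margin below 1 ever change w, which mirrors how support vectors alone shape the final boundary.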

Decision Tree

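Simple yet effective, Decision Trees work for both classification and regression. Using tree-like structures, these algorithms make decisions by recursively splitting the data on feature values, at each node choosing the split that best separates the target (for example, by Gini impurity or entropy for classification, or by variance reduction for regression). Decision Trees are easy to visualize and interpret, but they can overfit the training data if they are not pruned or depth-limited properly, leading to poor generalization on new data.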
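A minimal sketch of a classification tree is shown below, assuming a CART-style approach: binary splits of the form `feature <= threshold`, chosen by Gini impurity, with a `max_depth` limit standing in for pruning. The helper names and the toy dataset are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Return the (feature, threshold) with the lowest weighted child impurity, or None."""
    best, best_score = None, gini(y)  # only accept splits that reduce impurity
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Recursively split; max_depth is a simple guard against overfitting."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority  # leaf node: predict the majority label
    split = best_split(X, y)
    if split is None:
        return majority
    f, t = split
    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left_idx], [y[i] for i in left_idx], depth + 1, max_depth),
        "right": build_tree([X[i] for i in right_idx], [y[i] for i in right_idx], depth + 1, max_depth),
    }


def predict_tree(node, x):
    # Walk down the tree until reaching a leaf label
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node


X = [[1, 1], [1, 3], [2, 2], [3, 1], [4, 1.5], [3, 3], [4, 4]]
y = ["no", "no", "no", "no", "no", "yes", "yes"]
tree = build_tree(X, y)
print(predict_tree(tree, [3.5, 3.5]), predict_tree(tree, [2, 1]))  # yes no
```

The nested dictionaries can be printed directly, which reflects the interpretability advantage mentioned above.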

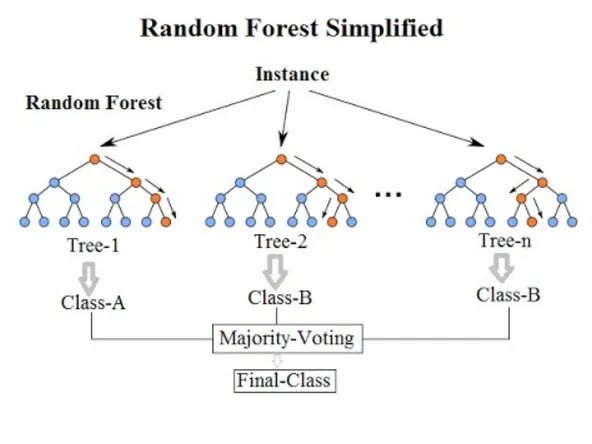

Random Forest

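Random Forest, an ensemble of Decision Trees, reduces overfitting by combining many trees' predictions. Each tree is trained on a bootstrap sample of the data (drawn with replacement) and considers only a random subset of features at each split; the forest then takes a majority vote for classification or averages the trees' outputs for regression. This method handles high-dimensional and categorical data well. However, its computational cost grows with the number of trees, and it is harder to interpret than an individual Decision Tree.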
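The following is a minimal sketch of the bagging-plus-random-features idea, assuming small Gini-based trees as the base learners and majority voting. All names, the number of trees, `max_features`, and the toy data are hypothetical choices for illustration.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(X, y, rng, max_features, depth=0, max_depth=4):
    """Grow a tree that considers only a random subset of features at each split."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    features = rng.sample(range(len(X[0])), max_features)
    best, best_score = None, gini(y)
    for f in features:
        for t in sorted(set(row[f] for row in X)):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    if best is None:
        return majority
    f, t = best
    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t,
            build_tree([X[i] for i in li], [y[i] for i in li], rng, max_features, depth + 1, max_depth),
            build_tree([X[i] for i in ri], [y[i] for i in ri], rng, max_features, depth + 1, max_depth))


def predict_tree(node, x):
    while isinstance(node, tuple):  # internal nodes are (feature, threshold, left, right)
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def fit_random_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw n rows with replacement
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], rng, max_features))
    return forest


def predict_forest(forest, x):
    # Majority vote across all trees
    return Counter(predict_tree(tree, x) for tree in forest).most_common(1)[0][0]


X = [[0, 0], [1, 0], [0, 1], [1, 1], [9, 9], [10, 9], [9, 10], [10, 10]]
y = ["a", "a", "a", "a", "b", "b", "b", "b"]
forest = fit_random_forest(X, y)
print(predict_forest(forest, [0, 0]), predict_forest(forest, [10, 10]))
```

Because each tree sees different rows and features, individual trees disagree on borderline points, and the vote smooths out those individual errors.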

Naive Bayes

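The Naive Bayes algorithm is a probabilistic approach to classification based on Bayes' theorem: it picks the class c that maximizes P(c) multiplied by the product of P(xi | c) over all features xi. Its simplicity and efficiency are standout features, making it suitable for high-dimensional datasets such as text. Nevertheless, the "naive" assumption that features are conditionally independent given the class can be limiting, as real-world features are often correlated.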
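As an illustration, here is a minimal multinomial Naive Bayes for short token lists, assuming add-one (Laplace) smoothing and working in log space to avoid underflow. The function names and the spam/ham toy corpus are hypothetical; Gaussian or Bernoulli variants exist for other feature types.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(docs, labels, alpha=1.0):
    """Multinomial Naive Bayes with add-alpha smoothing.
    docs: list of token lists; labels: class name for each doc."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word -> count
    vocab = set()
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
        vocab.update(tokens)
    model = {}
    for c in class_counts:
        denom = sum(word_counts[c].values()) + alpha * len(vocab)
        model[c] = {
            "log_prior": math.log(class_counts[c] / len(docs)),
            "log_likelihood": {w: math.log((word_counts[c][w] + alpha) / denom) for w in vocab},
        }
    return model


def predict_naive_bayes(model, tokens):
    """Return the class maximizing log P(c) + sum of log P(word | c).
    Adding per-word terms is exactly the 'naive' independence assumption."""
    best_class, best_score = None, -math.inf
    for c, params in model.items():
        score = params["log_prior"]
        for w in tokens:
            if w in params["log_likelihood"]:  # ignore words never seen in training
                score += params["log_likelihood"][w]
        if score > best_score:
            best_class, best_score = c, score
    return best_class


docs = [["win", "money", "now"], ["cheap", "money", "offer"], ["win", "cheap", "prize"],
        ["meeting", "at", "noon"], ["project", "meeting", "notes"], ["lunch", "at", "noon"]]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]
model = train_naive_bayes(docs, labels)
print(predict_naive_bayes(model, ["win", "cheap", "money"]))  # spam
print(predict_naive_bayes(model, ["meeting", "noon"]))  # ham
```

Training is a single counting pass over the data, which is why the method stays fast even with very large vocabularies.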

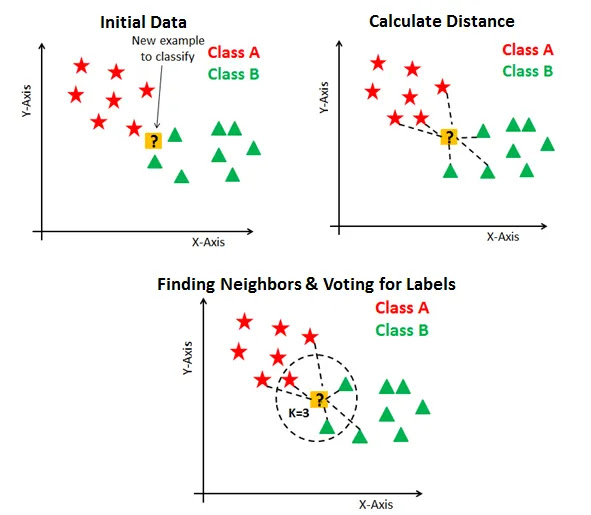

K-Nearest Neighbors (KNN)

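KNN classifies a new data point by finding the k training points closest to it (for example, by Euclidean distance) and taking a majority vote of their labels; it can also be used for regression by averaging the neighbors' values. While simple and flexible, KNN's performance depends on the choice of k and the distance metric, it is sensitive to feature scaling, and prediction can be computationally intensive for large datasets because a naive implementation compares each query against every training point.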
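A minimal KNN classifier might look like the following sketch, assuming Euclidean distance (via `math.dist`, Python 3.8+) and a brute-force scan over the training set. The function name and data are hypothetical.

```python
import math
from collections import Counter


def knn_predict(X_train, y_train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Brute force: compute the distance to every training point for each query
    distances = sorted(
        ((math.dist(x, query), label) for x, label in zip(X_train, y_train)),
        key=lambda pair: pair[0],
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


X_train = [[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]]
y_train = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(X_train, y_train, [1.5, 1.5]))  # red
print(knn_predict(X_train, y_train, [6.5, 6.5]))  # blue
```

There is no training step at all; the cost moves entirely to prediction time. Standardizing features beforehand matters, since otherwise features with large numeric ranges dominate the distance.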

K-means

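K-means, an unsupervised algorithm, partitions data into k clusters. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points, stopping when the assignments no longer change. Its scalability and simplicity make it a valuable tool for grouping data, yet it requires choosing k in advance, depends on the initial centroids, and works best with roughly spherical, similarly sized clusters, making it less suitable for complex data distributions.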
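The assign-then-update loop can be sketched as below (Lloyd's algorithm). For initialization it assumes a simple deterministic farthest-first choice of starting centroids; k-means++ is the common randomized alternative. Function names and data are hypothetical.

```python
def dist2(a, b):
    """Squared Euclidean distance."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def mean(points):
    n = len(points)
    return [sum(coords) / n for coords in zip(*points)]


def kmeans(points, k, max_iter=100):
    # Farthest-first initialization: start from the first point, then repeatedly
    # add the point farthest from all centroids chosen so far
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(dist2(p, c) for c in centroids))
        centroids.append(list(farthest))
    assignments = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid
        new_assignments = [min(range(k), key=lambda j: dist2(p, centroids[j])) for p in points]
        if new_assignments == assignments:
            break  # converged: assignments stopped changing
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[j] = mean(members)
    return centroids, assignments


points = [[1, 1], [1.5, 2], [2, 1.5], [8, 8], [8.5, 9], [9, 8.5]]
centroids, assignments = kmeans(points, k=2)
print(assignments)  # [0, 0, 0, 1, 1, 1]
print(centroids)  # [[1.5, 1.5], [8.5, 8.5]]
```

Each step can only lower the total within-cluster squared distance, so the loop terminates, but it may settle in a local optimum, which is why initialization matters.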

Dimensionality Reduction

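In the quest to simplify complex datasets, Dimensionality Reduction techniques like PCA, LDA, and t-SNE shine. They reduce the number of features while trying to preserve the most important structure: PCA projects the data onto the directions of greatest variance, LDA (a supervised method) finds directions that best separate known classes, and t-SNE is used mainly to visualize high-dimensional data in two or three dimensions. While these techniques can improve machine learning performance and data visualization, they risk discarding useful information in the process.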
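Only PCA is sketched here (LDA and t-SNE are not shown). The snippet assumes power iteration on the sample covariance matrix to find the first principal component, one simple method among several; libraries typically use a singular value decomposition instead. The function name and data are hypothetical.

```python
import math


def pca_first_component(X, n_iter=200):
    """Return (unit direction of greatest variance, 1-D projections of the centered data)."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in X]
    # Sample covariance matrix (d x d)
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    # Power iteration: repeatedly multiply by cov and normalize; converges to the
    # eigenvector with the largest eigenvalue (assuming the start vector is not orthogonal to it)
    v = [1.0] * d
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    projected = [sum(r[j] * v[j] for j in range(d)) for r in centered]
    return v, projected


X = [[2.0, 1.9], [0.5, 0.7], [2.2, 2.1], [1.0, 1.1], [3.0, 2.9]]
v, projected = pca_first_component(X)
print(v)  # roughly [0.75, 0.66]
print(projected)  # each 2-D point reduced to a single coordinate
```

The two features here are strongly correlated, so a single coordinate along `v` keeps almost all of the variance; the small leftover spread is the information this reduction discards.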

Gradient Boosting and AdaBoost

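Lastly, boosting techniques like Gradient Boosting and AdaBoost combine weak models, typically shallow decision trees, into a strong predictive tool. They build the ensemble sequentially, with each new model focusing on the errors of the models before it: AdaBoost increases the weights of misclassified training points, while Gradient Boosting fits each new model to the negative gradient of the loss (the residuals, for squared error). They are versatile across many data types, but AdaBoost in particular is sensitive to noisy labels and outliers, and both methods' computational cost and sensitivity to parameters such as the learning rate and number of rounds require careful consideration.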
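The reweighting idea can be sketched with AdaBoost over decision stumps (one-split trees); Gradient Boosting is not shown. Labels are assumed to be -1/+1, and the helper names, the number of rounds, and the toy data are hypothetical. The data places positives at both ends of a line, so no single stump can classify it correctly.

```python
import math


def fit_stump(X, y, weights):
    """Find the single-feature threshold rule with the lowest weighted error.
    Returns (feature, threshold, polarity, error); predicts polarity if x[f] <= t, else -polarity."""
    best = None
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            for polarity in (1, -1):
                err = sum(w for row, yi, w in zip(X, y, weights)
                          if (polarity if row[f] <= t else -polarity) != yi)
                if best is None or err < best[3]:
                    best = (f, t, polarity, err)
    return best


def stump_predict(stump, x):
    f, t, polarity, _ = stump
    return polarity if x[f] <= t else -polarity


def adaboost(X, y, n_rounds=10):
    """y must contain -1/+1 labels. Returns a list of (alpha, stump) pairs."""
    n = len(X)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, weights)
        err = max(stump[3], 1e-10)  # avoid division by zero for a perfect stump
        if err >= 0.5:
            break  # the weak learner is no better than chance
        alpha = 0.5 * math.log((1 - err) / err)  # more accurate stumps get larger votes
        ensemble.append((alpha, stump))
        # Increase weights of misclassified points, decrease the rest, then renormalize
        weights = [w * math.exp(-alpha * yi * stump_predict(stump, xi))
                   for xi, yi, w in zip(X, y, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(X, y, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in X])  # [1, 1, -1, -1, 1, 1]
```

After three rounds the weighted vote of three stumps fits this pattern, which none of them fits alone. The same reweighting explains the outlier sensitivity noted above: a mislabeled point keeps gaining weight round after round.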
